Multi-Threading & Mutation

On mutation, how it subtly occurs in single-threaded code, and how it can disrupt the process of upgrading single-threaded code to being multi-threaded.

On day one, to even begin programming anything, programmers must understand how to write text files, and use an interpreter, or compiler, or assembler, to turn them into executing programs. Soon after, they must roughly understand what memory is, and how to use addresses and pointers (even if they’re working in various high level languages in which those concepts masquerade under other names). This naturally follows into a basic understanding of memory allocation. An important next step is learning to write code to operate on batches of data, rather than single elements of data, for both performance and simplicity. They can then understand how to more simply manage memory, by being organized about these batches, and performing the allocations themselves as a batch, upfront. They can become more familiar with the underlying mechanisms of the CPU, how it obtains memory, performs computations, and writes memory.

At each of these milestones, the programmer’s understanding of the underlying hardware, and how to gracefully use it, broadens. And because modern hardware offers several independently-executing processor units, one of the next most crucial milestones in this journey is writing code to execute in parallel, in multiple simultaneously-executing instruction streams, rather than just a single instruction stream. This is critical for both software performance and utility. When writing user-mode code on a multitasking operating system, like myself and most developers, this is done with multi-threading.

Many of these milestones introduce new constraints which apply pressure on code to be shaped in a particular way. If a programmer solves for only some of these constraints, it’s like building a piece of furniture and tightening one particular screw fully, before even placing all other screws. Code, much like many other things, is partly a function of its environment—that is, the constraints to which it’s regularly exposed.

Multi-threading is one such milestone. Single-threaded code can be built with a different set of constraints than multi-threaded code, and as such, it is often extremely difficult to later add the “screw” of multithreading to a mostly-fully-built piece of “furniture”. In other words, if code needs to be multi-threaded, then it should be built under those constraints as early as possible; more and more of it will need to be rewritten the later those constraints are introduced.

Sometimes, it makes more sense to willingly accept the cost of rewriting, especially if it’s difficult to predict what the correct multi-threaded design for a problem will be, and a single-threaded version must be first written to explore the problem space. I think there is often too much reluctance toward rewriting systems, and too many resources put into “reusability” (before the exact circumstances of that reuse are fully understood), but I digress.

For me, multi-threading was initially an extremely intimidating milestone to tackle, because it introduces so many of these additional constraints. It seems to add an entirely new dimension to programming, and many techniques one learns to work adequately in single-threaded space suddenly become invalid in multi-threaded space.

But as I’ve learned over the past couple of years, this doesn’t mean that, at the end of the day, it always needs to be difficult. It can instead be quite easy, after internalizing these additional constraints, and designing systems accordingly. Designing such systems can obviously be tricky and dependent on one’s own ingenuity, but multi-threading being intimidating was more a function of its opacity rather than its intrinsic difficulty. In other words, hard problems are still hard, but when I was first thinking about multithreading, there were many problems that seemed like they should be easy that were also hard.

Anytime someone writes a shader, for example, they’re doing multi-threaded programming. Shadertoy proves that this can be simple for something like tens (if not hundreds) of thousands of programmers, and it mostly happens invisibly, because the underlying primitives are well-designed. I learned that I can also design underlying primitives, for a given problem, to make multithreaded programming simple.

I’ve written before about some lessons I’ve learned which have helped me internalize these additional constraints, and design multi-threaded systems. Recently, I’ve been doing much more multi-threaded programming, and I want to share more of those lessons in this post.

It’s Not How You Synchronize—It’s How You Don’t

Generally, when someone is being taught multithreaded programming, they are taught about synchronization mechanisms—for instance, atomic CPU operations, and the higher-level abstractions that they are often used to implement, like mutexes, condition variables, semaphores, and so on. And while understanding synchronization mechanisms is important, the true task of writing multithreaded systems is not figuring out how to synchronize, it’s how to not synchronize. One of the major benefits of multi-threaded code is, obviously, that it allows multiple tasks to happen simultaneously. The more synchronization a multithreaded system requires, the more this benefit dissolves. Of course, some amount of synchronization must exist in order for two systems to work together, and at those synchronization points, getting the tricky details of synchronization mechanisms is critical—but ideally, the vast majority of multi-threaded code is executing without synchronization at all.

One intrinsic characteristic of multi-threaded code is that simultaneous reads can happen without any synchronization. Writes, on the other hand, can require synchronization (with both other writes and reads)—for one touched region in memory, it is really only ever well-defined to have a single write at a time, and thus there must be some synchronized order of writes, and reads cannot occur while a write occurs. This means either reads or non-conflicting writes lend themselves better to multi-threaded code than potentially-conflicting writes.

Thus, designing multi-threaded systems which execute cleanly in parallel requires careful attention to where reads occur, where writes—mutations—occur, and what is mutated. Writes should not conflict with other threads also performing writes as often as possible, lest the system requires additional synchronization. In other words, the mutations performed by each thread should be bucketed whenever possible.

A simple example of bucketed mutations would be when many threads allocate and mutate a local variable on the stack. This is trivially conflict-free and thus requires no synchronization, because the term “the stack” actually refers to a per-thread stack, and thus each thread mutates a completely different region in memory:

void ThreadEntryPoint(...)

{

U64 x = 0;

for(U64 idx = 0; idx < 1000; idx += 1)

{

x += idx; // will *never* conflict with other threads

}

}

void EntryPoint(...)

{

LaunchThread(ThreadEntryPoint, ...);

LaunchThread(ThreadEntryPoint, ...);

}And a simple example of non-bucketed mutations would be when many threads write into the same fixed address in memory, e.g. through a static variable:

void ThreadEntryPoint(...)

{

static U64 x = 0;

for(U64 idx = 0; idx < 1000; idx += 1)

{

x += idx; // will *always* conflict with other threads

}

}

void EntryPoint(...)

{

LaunchThread(ThreadEntryPoint, ...);

LaunchThread(ThreadEntryPoint, ...);

}In the above example, the conflicting write—the non-bucketed mutation—is not properly synchronized, and so the value of x is not reliably well-defined, and thus of much use.

Bucketing Allocations & Arenas

A common example of mutation is mutating some data to allocate memory. Because allocations are a subset of mutations, they have the same characteristics in multi-threaded systems that other mutations do. So bucketing mutations wherever possible implies the rule to bucket allocations wherever possible.

Bucketing allocations for multi-threaded purposes often leads naturally to many opportunities to bucket allocations by lifetime. As such, the arena allocator is an excellent tool for bucketing allocations.

Many people have asked me about synchronization mechanisms being baked into arena allocators, such that the lowest level arena-based allocation operation—ArenaPush—is thread-safe. This is an understandable question, because other allocators—e.g. malloc—are designed to be callable from several threads at once.

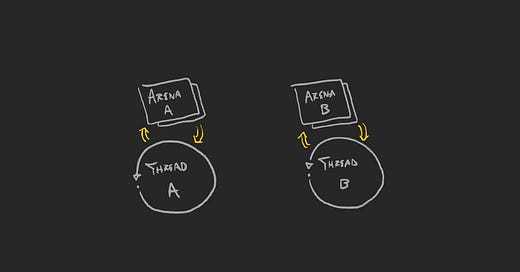

But making the basic arena operations thread-safe is putting the cart before the horse, and my reasoning for preferring read-only access and bucketed mutations in multi-threaded systems hopefully clears up why—it is assuming that the correct architecture requires synchronized access to the same arena. But the much more preferable alternative, when it is indeed possible for a given problem, is to use each arena as a per-thread bucketed allocation (and thus bucketed mutation) mechanism, such that only one thread trivially accesses an arena at a time with no synchronization.

An arena can be, of course, used along with synchronization mechanisms, such that an arena is not per-thread but rather per-data-structure, or per-hash-table-stripe, and so on, but baking in that synchronization at the lowest level is a misunderstanding of the ideal multi-threaded system, where threads execute almost entirely without synchronization (and thus almost entirely with read-only access to shared regions, or non-conflicting reads and writes). Using synchronization mechanisms with an arena is a compromise on that ideal. This is perfectly acceptable in many circumstances, since it may be for a different ideal (e.g. storing a heavy resource once, saving both memory and compute time, thus requiring synchronized access to a shared cache), but in any case, the arena implementation is more flexible, and functions well for both cases, when synchronization is user-defined, often resulting in no synchronization whatsoever.

Implicit Mutations & Work Independence

In single-threaded space, it is often convenient or useful to combine several codepaths and produce one effective codepath. But sometimes, this is done such that one low-level codepath in an effective codepath contains a mutation, and another low-level codepath in the same effective codepath contains only a read. Take the following example, from a previous post:

// retrieves value associated with `key` - if it does not

// exist inside `table`, then inserts it with initial value

// of `default_val`

Val ValFromKey(Table *table, Key *key, Val default_val);

Key key = ...;

Val val = ValFromKey(table, &key, default_val);In that previous post, I wrote about how this kind of immediate-mode API can be extremely useful in collapsing the number of low-level codepaths required in a specific problem, particularly the number of low-level codepaths responsible for maintaining complex state machines.

But in multi-threaded systems, this kind of API requires extra analysis. The effective codepath must be treated as having the mutational properties of all of its potential low-level codepaths. Thus, the effective codepath which calls ValFromKey can only be understood as mutational of its table argument. If that table is a shared cache, perhaps shared across threads, then it has potentially conflicting mutations, thus requiring synchronization.

As I discussed earlier, this may be a perfectly suitable design—for example, if I replaced Val with TextureHandle, and ValFromKey with TextureFromKey, and this API was used to do quick lookups into a shared texture cache given a key, and I expect that mostly to consist of several in-flight reads after textures are loaded (and thus not requiring synchronization with writes), then synchronization is a perfectly reasonable trade.

But the details can change. Suppose that this effective codepath is used as a helper mechanism in two other overarching codepaths. In the first case, the call to ValFromKey is mutational in 99% of cases. In the second task, the call to ValFromKey is 100% non-mutational, and thus only doing a read-only lookup.

This manifested recently on a project I work on, where a ValFromKey mechanism was being used to build a large deduplicated hash table of strings, and the same mechanism was later being used to look up nodes in that hash table and gather information from them. The system I was working on was originally written as single-threaded code, and I was doing a pass over the system to improve its performance, particularly by moving independent streams of work to execute simultaneously, with no synchronization, on multiple threads.

Of course, in the single-threaded context, this code functions perfectly correctly—but by blurring the line between mutational writes and read-only access, it gave both a 99% mutational low-level codepath and a 100% read-only codepath the same name. Of course, if I verified that indeed the latter case was 100% read-only, I could simply call it anyways as a read-only effective codepath, but this is awfully close to “cheating”—it’d only take a small number of completely innocent changes to invisibly break the system with new race conditions, and so I found it much more reasonable to explicitly separate the “mutate explicitly” and “read-only” codepaths, and use each accordingly.

After doing so, because all allocations in the system are explicit to callers with Arena parameters, I instead have an API like the following:

void TableInsert(Arena *arena, Table *table, Key *key, Val *val);

Val *TableLookup(Table *table, Key *key);With this style of API, the caller is in control of the allocation bucket via the Arena parameter. As such, the fact that TableLookup is read-only is explicit (as there is no such parameter), and thus guaranteed to function correctly in parallel with other codepaths also executing TableLookup.

In the aforementioned problem, that allowed the second codepath to be massively improved in performance—the previously single-threaded code doing 100% read-only lookups into a table could now be reorganized to execute completely in parallel.

Making Conflicting Writes Non-Conflicting, With Join Operations

But what about the case of TableInsert? It’s easy to understand the analysis of looking at the codepath responsible for building the table, and concluding that: each call to TableInsert mutates arena and table, which conflicts with other calls to TableInsert, and therefore all TableInserts must be synchronized.

And again, in some cases this may be completely reasonable. And in those cases, as I’ve found, it isn’t necessarily the end of the world. I’ve found that it’s often easy to implement a much cleverer synchronization mechanisms than—for instance—just throwing a lock around table and arena. For instance, if table is a hash table, instead of taking an Arena, TableInsert can take a different mechanism—I’ll call it a Guard—such that the insertion mechanism can map a (Guard, Hash) pair to an Arena and a read-write lock, where the Guard can have several Arenas and locks, subdividing the hash table. Thus, in order to actually require a writing lock, multiple threads need to not only be writing to the same table, but to the same “stripe” in the same table.

This already provides an improvement over the single lock and arena, but beyond this, it’s often well within reason to do much better than that, with carefully-designed atomic locking operations rather than the heavier-handed locking abstractions. But a full investigation of such techniques deserves its own post. Clever locking mechanisms, from what I can tell, seem like they’re useful in getting another 20%, 30%, or 40% out of code which already has synchronization built in, but they’re still much slower than code which requires no synchronization whatsoever.

But a simple tweak to the problem allows all calls to TableInsert to be bucketed, and thus non-conflicting. Instead of having a single table, I can also simply have many tables, and then have a separate “join” step, which allows me to combine the many tables into a single joined table. In many cases, a multi-threaded “build” step, with each thread operating without synchronization (and on separate arenas), followed by a “join” step, may be much more efficient than a multi-threaded “build” step which requires synchronized access to a single data structure.

Assume Table is a simple linked-list-chaining hash table:

struct Node

{

Node *next;

Key key;

Val val;

};

struct Slot

{

Node *first;

Node *last;

};

struct Table

{

U64 slots_count;

Slot *slots;

};A “join” operation for two Tables with the same slots_count can then be easily written as a linked-list concatenation for each slot.

Table *dst = ...;

Table *src = ...;

for(U64 idx = 0; idx < slots_count; idx += 1)

{

if(dst->slots[idx].last && src->slots[idx].first)

{

dst->slots[idx].last->next = src->slots[idx].first;

dst->slots[idx].last = src->slots[idx].last;

}

else if(src->slots[idx].first)

{

MemoryCopyStruct(&dst->slots[idx], &src->slots[idx]);

}

}If type-system-enforcement of the same slots_count is desired, then the API can be slightly tweaked to the following:

struct Table

{

// `slots_count` is omitted - chosen/passed by user

Slot *slots;

};

void TableInsert(Arena *arena, Table *table, U64 slots_count, Key *key, Val *val);

Val *TableLookup(Table *table, U64 slots_count, Key *key);

void TableJoin(Table *dst, Table *src, U64 slots_count);A “join” operation can also be extended with a number of other features, like sorting each bucket to ensure deterministic results. It can also be easily parallelized, as each slot’s “join” operation is entirely independent from the joining work for all other slots. As such, the mutations for the “join” operation are bucketed.

Closing Thoughts

Similar to my feeling after learning simpler memory management techniques, I’ve learned that multi-threaded programming becomes significantly easier when I adopt a careful, grounded, and organized approach, and when I’m closely familiar with a given problem’s details, and meticulously untangle operations by their lower level properties, rather than relying purely on stories told at higher levels of abstraction. In this case, those details include reads, conflicting writes, and non-conflicting writes.

I hope this post provided similar insight to you.

If you enjoyed this post, please consider subscribing. Thanks for reading.

-Ryan

I saw this after struggling on a multithreaded project for a few days. Its my first time dealing with these kinds of problems, and your thoughts have really helped me out. Thank you.

This is a fascinating read. It does add a little more mental "checks" when thinking about codebases though. I'm trying to keep effective code paths in mind when writing, but it's a little bit daunting knowing that moving to a multi-threaded environment would also require the consideration of read/write or sync/no-sync differences before collapsing things into a single effective code path.